On 30 October 2008, the US Court of Appeals for the Federal Circuit (CAFC) handed down its en banc decision in In re Bilski, affirming the rejection of patent claims directed to a method of hedging risks in commodities trading. I have analysed IP Australia patent examination data, which provides reason to believe that this specific event triggered an unacknowledged change in examination practice within the Australian Patent Office. This change subsequently resulted in a number of published decisions in which claims to computer-implemented ‘business methods’ were found to be unpatentable, and two appeals that went as far as a Full Bench of the Federal Court of Australia. It is also reasonable to suppose that numerous further applications simply lapsed without fanfare following objections raised by patent examiners.

Most readers would be aware that the Bilski case went on further appeal to the US Supreme Court, which again confirmed the rejection in an opinion issued on 28 June 2010. The Supreme Court decision was almost immediately cited by an Australian Patent Hearing Officer, who found that a claimed method for commercialising inventions does not constitute patentable subject matter under the Australian ‘manner of manufacture’ test: Invention Pathways Pty Ltd [2010] APO 10.

The Invention Pathways decision resulted in an official change to Australian Patent Office practice whereby a claimed invention, to be patent-eligible, would not only need to involve a ‘concrete effect or phenomenon or manifestation or transformation’ (as per Grant v Commissioner of Patents [2006] FCAFC 120), but one that is ‘significant both in that it is concrete but also that it is central to the purpose or operation of the claimed process or otherwise arises from the combination of steps of the method in a substantial way’.

Invention Pathways was the first of a series of Patent Office decisions refusing or revoking patents for so-called computer-implemented ‘business methods’ based on this logic, including Iowa Lottery [2010] APO 25, Research Affiliates, LLC [2010] APO 31 and [2011] APO 101, Myall Australia Pty Ltd v RPL Central Pty Ltd [2011] APO 48, Discovery Holdings Limited [2011] APO 56, Network Solutions, LLC [2011] APO 65, Jumbo Interactive Ltd & New South Wales Lotteries Corp v Elot, Inc. [2011] APO 82, Sheng-Ping Fang [2011] APO 102, and Celgene Corporation [2013] APO 14. The Research Affiliates and RPL Central matters both ultimately ended up on appeal before a Full Bench of the Federal Court of Australia, which confirmed that the claims in each case were not patent-eligible, albeit for reasons somewhat different from the Patent Office’s ‘centrality of purpose’ test.

While published reasons are the most visible and detailed outcomes of Patent Office decision-making, they typically have their genesis some months – or even years – earlier, with one or more reports issued by examiners. The original Research Affiliates application, for example, was first rejected by a patent examiner on 28 October 2009, eight months prior to the Invention Pathways decision. It is also notable that this type of ex parte hearing, i.e. involving only the patent applicant ‘appealing’ an examiner’s decision, has historically been relatively rare. Prior to Invention Pathways, I count a total of just 11 published decisions of this type under the Patents Act 1990 (which commenced on 1 April 1991). This number roughly doubled in the three years following Invention Pathways, driven by rejections of ‘business method’ applications. It is therefore difficult to avoid inferring that something changed in the prior period that led to this unusual spike in hearings.

The Outcomes of Examination

To investigate possible changes in examination practice, I posed a simple question: once a first examination report has been issued, what is the eventual outcome for an application? Is it ultimately accepted, or does it end up lapsing (because the applicant concedes defeat, gives up or loses interest in the invention) or being refused (as a result of a formal Patent Office decision)?

To put this question in statistical terms: across all applications of interest, what proportion of applications are eventually accepted, versus those lapsing or refused, as a function of the date of issue of the first report?

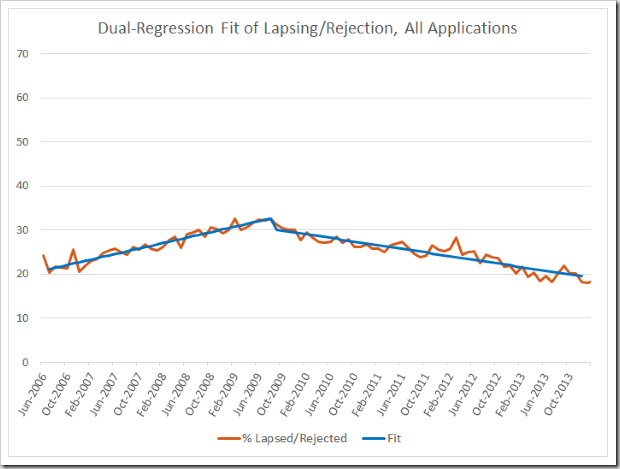

I used the Intellectual Property Government Open Data (IPGOD) 2016 data set to investigate this question. (For more details of this data set, see my earlier article on attorney firm market share.) The graph below shows the results for all applications, in all fields of technology, where initial examination reports were issued between January 2006 and December 2015 (which is the last month covered by the current IPGOD data set), grouped by the month of the first report. Obviously, close to the end date of the data, an increasing number of applications are still pending (i.e. have not yet lapsed or been accepted). However, it is apparent that examination has been finalised one way or another where the first report issued prior to January 2014.
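The calculation behind a graph of this kind can be sketched as follows. This is a minimal illustration only, not the code used for this article: the record layout (a first-report month string paired with a status string) and the status labels are assumptions, and do not correspond to actual IPGOD column names or codes.

```python
from collections import defaultdict

# Hypothetical status labels; the real IPGOD status codes may differ.
NEGATIVE = {"lapsed", "refused"}

def lapsed_or_refused_share(records):
    """Return {month: percentage of applications lapsed or refused}.

    `records` is an iterable of (first_report_month, status) pairs, where
    first_report_month is a 'YYYY-MM' string (an assumed layout).
    Pending and accepted applications count towards the monthly total.
    """
    totals = defaultdict(int)
    negatives = defaultdict(int)
    for month, status in records:
        totals[month] += 1
        if status in NEGATIVE:
            negatives[month] += 1
    return {m: 100.0 * negatives[m] / totals[m] for m in sorted(totals)}

# Made-up records, for illustration only.
sample = [
    ("2008-10", "accepted"), ("2008-10", "lapsed"),
    ("2008-10", "accepted"), ("2008-10", "accepted"),
    ("2008-11", "lapsed"), ("2008-11", "refused"),
    ("2008-11", "accepted"), ("2008-11", "pending"),
]
shares = lapsed_or_refused_share(sample)
```

Counting pending applications in the denominator is a design choice in this sketch; it is the reason months close to the end of the data set understate the eventual lapsed/rejected rate.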

One thing that is immediately notable is that it appears to have been getting ‘harder’ to obtain a patent in Australia during the period prior to mid-2009, with the proportion of applications lapsing or rejected rising from around 20% to a little over 30%. However, this trend seems to have abruptly reversed with lapsed/rejected rates falling below 2006 levels by the end of 2013. I do not presently have an explanation for this apparent break in the data, and would welcome any suggestions. (Additionally, if you are interested in an analysis of the break establishing its statistical significance, see the Appendix at the end of this article.)

Whatever their origins, the general trends in the graph above provide a backdrop to the real questions of interest to me: what happened with ‘business method’ applications prior to 2010 and when, exactly, did it happen?

The Fate of ‘Business Method’ Applications

International Patent Classification (IPC) subclass G06Q covers:

Data processing systems or methods, specially adapted for administrative, commercial, financial, managerial, supervisory or forecasting purposes; systems or methods specially adapted for administrative, commercial, financial, managerial, supervisory or forecasting purposes, not otherwise provided for

Although some inventions that may be regarded as ‘computer-implemented business processes’ may fall under other sections of the IPC, subclass G06Q evidently includes a large population of such inventions. It thus provides a useful sample of data for investigation of how applications within this technology area may be treated relative to the general body of applications reflected in the above graph. I therefore extracted from the full set only those applications classified (as either primary or secondary classification) within G06Q, and plotted the corresponding graph shown below.
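The selection step might look something like the sketch below, which keeps an application if any of its classification symbols, primary or secondary, falls within the subclass. The application identifiers, the symbols and the dictionary layout are invented for illustration; the actual IPGOD tables are organised differently.

```python
def in_subclass(ipc_symbols, subclass="G06Q"):
    """True if any classification symbol (primary or secondary) is in `subclass`."""
    prefix = subclass.upper()
    return any(s.strip().upper().startswith(prefix) for s in ipc_symbols)

# Hypothetical applications: id -> list of IPC symbols, primary first.
applications = {
    "A": ["G06Q 10/06", "G06F 17/30"],   # primary classification in G06Q
    "B": ["H04L 29/06", "G06Q 30/02"],   # secondary classification in G06Q
    "C": ["A61K 31/00"],                 # not in G06Q at all
}
g06q_ids = [app_id for app_id, symbols in applications.items()
            if in_subclass(symbols)]
```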

A few points may be noted just from observation of the graph.

- The data is quite ‘noisy’. This is due simply to the relatively small number of applications in the restricted IPC subclass. Over the period shown, the total number of initial examination reports issued on applications in G06Q each month is typically in the range 50-100, compared with around 1500-2000 across all applications.

- Although somewhat obscured by the fluctuations, the data for G06Q appear to exhibit the same general trend as seen more generally for all applications, i.e. an initial rise in the number of cases lapsed/rejected, with a subsequent decline from 2009.

- Further, and again despite the noise, it does appear as though there is some transition around late 2009, where there is a notable rise in the number of lapsed/rejected applications.

Analysis of ‘Business Method’ Examination Outcomes

In view of the third observation above, I conducted an analysis to determine whether the data shown in the chart above exhibits a statistically-significant step change and, if so, in what month that change most likely occurred. Further details are set out in the Appendix to this article, but the short answers are:

- yes, there is a clear shift in the time series;

- the most likely month in which this shift was initiated is November 2008;

- the change is highly statistically significant – the probability that the apparent break in the data is merely due to random variations in an underlying consistent trend is around 0.00003.

An application classified in IPC subclass G06Q which received a first examination report in November 2008 was 15% more likely to end up lapsed or rejected than an application receiving a first report in the previous month. The proportion of lapsed/rejected applications in this subclass subsequently declined. This could be due to whatever general factors caused a similar decline across all applications, or it could be due to factors specific to this group of applications, such as changes in applicant behaviour resulting from the increase in examination objections.

Notably, there is no evidence of any further change following the Invention Pathways decision in 2010, i.e. the existence of a growing number of formal written decisions that were added to the published Patent Office guidance, and to which examiners could refer, appears to have had no additional impact on rates of lapsed/rejected applications. This supports my hypothesis that these decisions were a consequence of changes in practice that had already occurred prior to Invention Pathways, and were not themselves a cause of change.

Conclusion – Data Does Not Lie!

When I had an on-the-record conversation with the Commissioner of Patents back in 2013, I asked directly whether there had been an unannounced change in practice around 2008-2009 in relation to the examination of ‘business method’ patents. Here is how that part of the interview went:

Mark Summerfield: ... to the extent you can comment, was there something in particular that led to this change in practice – this tightening up – of the way in which the manner of manufacture test was applied in relation to that subject matter?

Fatima Beattie: I can’t comment specifically on any of the cases that you might be alluding to. What I can say is that the Office practice is very much about understanding the government’s policy objectives as they are translated into the legislation that we administer. We interpret the legislation in the context of the policy objectives and judicial guidance in relation to the legislation.

MS: Not looking at the particular cases I guess, but just at – in terms of judicial guidance – there was nothing between 2006, when the Grant decision came out, and 2008. And normally when there’s a change of practice within the Office – such as there was, for example, with inventive step – that is flagged through an Official Notice, or through amendments to the Examiners Manual. Yet there didn’t seem to be any sort of indication that applications that people might have expected would have gone through, on their past experience, were now going to be subject to perhaps a slightly different approach, and a tightening up of the guidelines…

FB: Again, I think we need to note that we interpret the legislation in the context of the policy objective and the judicial guidance, and there has been judicial guidance, which has resulted in the practice that we have. Depending on what comes out of the court cases that are proceeding at the moment, further changes in practice may result. The government also has the option to change the legislation if the policy objective is not being served by the current legislation.

It is worth reiterating that, as of mid-2008, there had been no new ‘judicial guidance’ to cause any change in practice since the Full Court’s Grant decision on 18 July 2006. Thus, one interpretation of all of this talk of ‘policy objectives’ is that some form of directive came down from the government (bearing in mind that this was during the early days of the first Rudd Labor government). On the other hand, IP Australia has for some time been a major source of advice to government on IP policy matters, and so even a directive straight from the Minister could well have been the result of a process initiated by IP Australia.

Alternatively, the change could have been a response to the most obvious potential catalyst at the time, the US CAFC ruling in Bilski. Though while this might be ‘judicial guidance’, it is not Australian judicial guidance!

I believe that stakeholders in our patent system have a right to be informed of significant changes in practice – up-front, and not two years later when a case finally results in a published Patent Office decision. Applicants who paid for applications that ended up being rejected as a result of an undisclosed practice change are entitled to feel particularly hard done by.

We live in a time when citizens are increasingly suspicious of government, and when the more politicians and bureaucrats talk about ‘transparency’, the less they seem to practise it. The analysis is irrefutable – something happened in or around November 2008 in relation to the Australian Patent Office’s examination of so-called ‘business methods’. The data does not lie! It would be nice, even at this late stage, to receive some official acknowledgement and explanation of this.

Appendix: Statistical Analysis

The following is a brief outline of my analysis of the IPGOD data to produce the results discussed in the main article above.

My hypothesis was that there had been a change in examination practice in relation to applications broadly categorised as relating to ‘business methods’ (typically via computer-implementation) sometime around 2008-2009. If so, I expected that this would be observable in the outcomes of examinations commenced before and after the change. In the field of econometrics, a shift in a time series resulting from some event that affects the process producing the time series is commonly called a ‘structural break’. I adopt the same terminology here.

The series before and after a structural break is often modelled using two separate sets of regression parameters. In my case I applied dual linear regression to the examinations data for applications classified in IPC subclass G06Q up to December 2013, after which the number of still-pending applications within the data set starts to have a significant effect. In order to find the most likely month at which the break occurred I ran the regression for all months, and identified the one for which the overall squared-error was lowest. This turned out to be November 2008.
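The search for the break month can be sketched as below. This is an illustrative reconstruction rather than the code I actually ran: the function names, the minimum segment length and the synthetic series are all assumptions made for the example. Each candidate split is scored by the summed squared error of two independent least-squares lines, and the split with the lowest score is taken as the most likely break.

```python
def rss_linear(xs, ys):
    """Residual sum of squares of an ordinary least-squares line through (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))

def find_break(ys, min_segment=3):
    """Return (index, rss) of the split minimising the combined squared error.

    The series is split into ys[:index] and ys[index:], each fitted with its
    own line; min_segment keeps both segments long enough to fit a line.
    """
    xs = list(range(len(ys)))
    best = None
    for i in range(min_segment, len(ys) - min_segment + 1):
        rss = rss_linear(xs[:i], ys[:i]) + rss_linear(xs[i:], ys[i:])
        if best is None or rss < best[1]:
            best = (i, rss)
    return best

# Synthetic series (not real data): a rising trend, then a jump and a decline.
series = ([20 + 0.1 * t for t in range(30)]
          + [40 - 0.2 * t for t in range(30, 60)])
break_index, break_rss = find_break(series)
```

On this noise-free synthetic series the search recovers the split at index 30, where the jump was placed. With real, noisy data the minimum is less sharply defined, which is why a formal significance test is still needed.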

The null hypothesis, H0, in this case is that the data can actually be represented by a single regression, and that what appears to be a possible structural break is actually nothing more than random variation. I used the Chow test to determine whether to reject H0 (Gregory C Chow, ‘Tests of Equality Between Sets of Coefficients in Two Linear Regressions’, Econometrica, Vol. 28, No. 3 (July 1960), pp. 591-605). The value of the Chow test statistic for the G06Q data is 11.7, which corresponds to a p-value of 2.9 × 10⁻⁵.
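For readers who want to check the arithmetic, the Chow statistic compares the squared error of the single (pooled) regression with the combined squared error of the two segment regressions. The sketch below assumes k = 2 parameters per line (slope and intercept) and 96 monthly observations (January 2006 to December 2013). Both figures are inferred from the description of the analysis, not quoted from the original computation. The p-value helper uses a closed form for the upper tail of the F distribution that holds only when the numerator degrees of freedom equal 2.

```python
def chow_statistic(rss_pooled, rss_1, rss_2, n_total, k=2):
    """Chow F statistic for one candidate break, with k parameters per regression."""
    rss_split = rss_1 + rss_2
    return ((rss_pooled - rss_split) / k) / (rss_split / (n_total - 2 * k))

def f_upper_tail_df1_2(f_value, df2):
    """P(F > f_value) for an F(2, df2) distribution.

    Closed form valid only for numerator degrees of freedom equal to 2.
    """
    return (1.0 + 2.0 * f_value / df2) ** (-df2 / 2.0)

# Consistency check on the reported statistic, assuming 96 months and k = 2.
n_months, k = 96, 2
p_value = f_upper_tail_df1_2(11.7, n_months - 2 * k)
```

Under these assumptions a statistic of 11.7 gives a p-value of roughly 3 × 10⁻⁵, in line with the figure reported above.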

The Chow test assumes that the variance of the data before and after the structural break is the same (a property formally known as ‘homoscedasticity’). To check this, I applied Levene’s test, in view of the fact that the more common F-test for equality of variances that many of us learned back at school or university is unreliable unless the random variable is strictly drawn from a normal distribution. I have no reason to be confident that the examination outcome data is normally distributed, and the sample size is too small to test. The actual variances for the samples before and after November 2008 were remarkably similar (89.1 and 89.7 respectively). Applying Levene’s test, the p-value is 0.76, so there is no evidence against the null hypothesis of homoscedasticity, and the Chow test’s assumption appears reasonable.
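The statistic underlying a Levene-type test can be sketched as follows. This is an illustration under assumptions, not the code used for the analysis: the function name and the sample groups are invented, and the choice of centring on the median (the Brown-Forsythe variant) rather than the mean is one option among several.

```python
from statistics import mean, median

def levene_w(*groups, center=median):
    """Levene-type W statistic for equality of variances across groups.

    center=median gives the Brown-Forsythe variant; center=mean gives
    Levene's original form. The choice here is an assumption of this sketch.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # Absolute deviations of each observation from its group centre.
    z = [[abs(x - center(g)) for x in g] for g in groups]
    z_means = [mean(zi) for zi in z]
    z_grand = sum(sum(zi) for zi in z) / n_total
    between = sum(len(zi) * (zm - z_grand) ** 2 for zi, zm in zip(z, z_means))
    within = sum((v - zm) ** 2 for zi, zm in zip(z, z_means) for v in zi)
    return (n_total - k) / (k - 1) * between / within

# Synthetic groups (not real data).
same_spread = levene_w([1, 2, 3, 4, 5], [11, 12, 13, 14, 15])
different_spread = levene_w([1, 2, 3, 4, 5], [10, 20, 30, 40, 50])
```

A value of W near zero indicates similar spread in the groups, while a large value indicates unequal variances. To obtain a p-value, W is compared against an F distribution with (k − 1, N − k) degrees of freedom; SciPy's `scipy.stats.levene` function performs the whole test in one call.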

Thus we can confidently reject H0, and conclude that the dual-regression model provides a better explanation of the data.

I applied the same analysis to the examinations data for all applications and confirmed what should hardly come as a surprise simply based on visual inspection of the data – that a dual-regression model is also the better explanation of this data (the p-value was so small in this case that it could not be distinguished from zero using double precision computations). The ‘all applications’ lapsed/rejected data with the corresponding dual regression is shown below.

Before You Go…

Thank you for reading this article to the end – I hope you enjoyed it, and found it useful. Almost every article I post here takes a few hours of my time to research and write, and I have never felt the need to ask for anything in return.

But now – for the first, and perhaps only, time – I am asking for a favour. If you are a patent attorney, examiner, or other professional who is experienced in reading and interpreting patent claims, I could really use your help with my PhD research. My project involves applying artificial intelligence to analyse patent claim scope systematically, with the goal of better understanding how different legal and regulatory choices influence the boundaries of patent protection. But I need data to train my models, and that is where you can potentially assist me. If every qualified person who reads this request could spare just a couple of hours over the next few weeks, I could gather all the data I need.

The task itself is straightforward and web-based – I am asking participants to compare pairs of patent claims and evaluate their relative scope, using an online application that I have designed and implemented over the past few months. No special knowledge is required beyond the ability to read and understand patent claims in technical fields with which you are familiar. You might even find it to be fun!

There is more information on the project website, at claimscopeproject.net. In particular, you can read:

- a detailed description of the study, its goals and benefits; and

- instructions for the use of the online claim comparison application.

Thank you for considering this request!

Mark Summerfield
